Imagine you’re a brand and you’ve contracted an agency or vendor to run a direct-response display ad campaign. You’ve allowed a 30-day post-view and post-click attribution window, and you’ve allowed pixels (both retargeting and conversion) to be placed throughout your sales funnel.

After some initial hiccups, everything seems to be going well! Your CPA is consistently going down, your sales are going up, revenue is being tracked and fed back into your CRM system, and it looks like you’re spending less on media than you’re bringing in from sales. Everything looks perfect, so when the agency or vendor wants to double or even triple the budget, how can you possibly say no?

Then a small voice at the back of your mind speaks up. You look at your topline sales numbers and… that’s funny… despite all these new conversions, your sales haven’t increased dramatically, and certainly not as much as you would have expected. But the ads are working, right? The conversions are happening, or at least the agency or vendor says they’re happening. Then, suddenly, it hits you: what if the agency is taking credit for conversions that would have happened without any media spend at all?!

It’s a common story that’s a twist on the Wanamaker problem: you know which half of your media is claiming to be working, but a little more analytical effort is needed before you know what’s actually working.

An Ongoing Concern

Properly crediting view-through conversions is hardly a new problem, but with the increased emphasis on what Unilever CMO Keith Weed calls “The Three Vs (Value, Viewability and Verification),” it bears repeating and reexamination. Josh McFarland, CEO of TellApart (prior to its acquisition), wrote that counting view-through conversions exactly the same as click-through conversions, particularly from retargeting campaigns, was tantamount to fraud. And while “retargeting campaigns can prove that view-through value does exist,” he wrote, that value is hard to parse out without running “costly control [tests].”

Direct marketer Harry J. Gold warned ClickZ readers back in 2009 not to “count view-based conversions as conversions,” but rather to treat them as an “indicator of banner advertising’s influence on a consumer’s propensity to convert.”

Jeff Greenfield of attribution firm C3 Metrics is more blunt: he says that claiming view-through impressions provide no lift is like claiming that “TV, Radio or Out of Home” advertising doesn’t work, since no one clicks on a TV ad (at least not yet!).

Multiple sources, along with my own experience in the space, indicate that correctly counting view-through conversions is essential for capturing the full impact of media spending, particularly for multi-touch campaigns where a single user can be shown several different ad impressions across desktop, mobile and video.

That said, the operative word here is “correctly.” If you’re doing site-visitor retargeting, you’ll inevitably see a significant number of view-through conversions that would have occurred regardless of any media spend. After all, if the converter weren’t already interested in the product, they likely wouldn’t have visited the site in the first place. Giving view-through conversions full credit without checking whether they would have happened anyway is a mistake.

Calculations

So how can marketers find out which of their view-through conversions are legitimate? A sales lift test helps to determine which view-through conversions would have occurred without any additional media spend.

The best, clearest explanation of a sales lift test was written by Craig Galyon, formerly of SwellPath. I’ve re-printed his steps below, with some additional color.

Take approximately 10% of your campaign budget. With this 10% budget, set up two identical campaigns targeting the same audience and running at the exact same time. These two campaigns are clones, except that one runs your real, branded creatives (the “Test” group) and the other runs public service announcements, or PSAs (the ads you usually see when ad space isn’t filled), as the “Control” group. If we’re doing good science, it’s important that the two campaigns don’t overlap, meaning no user should see ads from both; you can use exclusion pixels to manage this.

For the test group, run the campaign as you normally would. For the control group, don’t run the campaign ads; run PSAs from the Ad Council that have nothing to do with the product you are advertising. Run the two side by side, then after a while (I personally like taking a few weeks, though timing can vary), see how both perform.

Also, if you’re testing multiple media strategies (e.g., run-of-network, retargeting and third-party audience data), make sure to run a separate test for each strategy.
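If you control a first-party user ID, one alternative to exclusion pixels (my own assumption, not part of Galyon’s steps) is to assign each user to an arm with a deterministic hash, so the same person always lands in the same group and never sees both branded ads and PSAs. Here’s a minimal Python sketch; `assign_arm` and its 50/50 `psa_share` default are hypothetical, and salting the hash with the strategy name gives each strategy its own independent split:

```python
import hashlib


def assign_arm(user_id: str, strategy: str, psa_share: float = 0.5) -> str:
    """Put a user in exactly one arm ("branded" or "psa") for one strategy.

    Hashing strategy and user ID together is deterministic: the same user
    always lands in the same arm for a given strategy, so nobody is exposed
    to both the branded ads and the PSAs. Each strategy gets its own split.
    """
    digest = hashlib.sha256(f"{strategy}:{user_id}".encode("utf-8")).hexdigest()
    # Map the first 32 bits of the hash onto a number in [0, 1).
    bucket = int(digest[:8], 16) / 2**32
    return "psa" if bucket < psa_share else "branded"


# Hypothetical usage: split 10,000 users for a retargeting lift test.
users = [f"user-{i}" for i in range(10_000)]
arms = [assign_arm(u, "retargeting") for u in users]
print(arms.count("branded"), arms.count("psa"))
```

Because the assignment is a pure function of the IDs, any system that sees the same user ID can apply it without sharing a lookup table.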

This might go without saying, but you can’t charge the client for the PSAs you run in a sales lift test. That’s a cost you’re just going to have to eat, and it’s the cost Josh McFarland was getting at when he described these tests as costly.

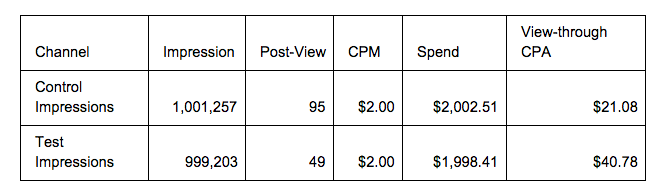

When the test is done, you’ll probably see something like this:

So, of the 95 conversions your branded campaign was credited with, 49 would likely have happened anyway, regardless of any spend (assuming both groups reached audiences of the same size).

To actually discount the conversions, it’s a pretty simple calculation:

Incremental Share = (Branded Group Conversions – PSA Group Conversions) / (Branded Group Conversions)

In the above example:

Incremental Share = (95 – 49) / 95

Incremental Share = 46 / 95

Incremental Share = 0.4842, or about 48%

That means that of the 95 view-through conversions that occurred, only about 48% are legitimate, giving us a true view-through conversion total of 46. In the above example, spreading the same media spend (roughly $2,003, or 95 × $21.08) across 46 conversions instead of 95 takes the CPA from $21.08 to $43.53, a number closer to reality.
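For anyone who wants to run these numbers across many tests, here’s a minimal Python sketch of the same arithmetic. The function name and the optional reach adjustment (scaling the PSA count when the two groups reached different numbers of users) are my own assumptions, not part of Galyon’s method:

```python
from typing import Optional


def lift_adjusted_cpa(branded_conversions: int,
                      psa_conversions: int,
                      spend: float,
                      branded_reach: Optional[int] = None,
                      psa_reach: Optional[int] = None) -> dict:
    """Discount branded-group conversions by the PSA-group baseline."""
    if branded_conversions <= 0:
        raise ValueError("branded_conversions must be positive")
    baseline = float(psa_conversions)
    # Assumption: if the groups reached different numbers of users, scale the
    # PSA baseline to the branded group's size before subtracting.
    if branded_reach and psa_reach:
        baseline *= branded_reach / psa_reach
    incremental = branded_conversions - baseline
    return {
        "incremental_share": incremental / branded_conversions,
        "incremental_conversions": incremental,
        "reported_cpa": spend / branded_conversions,
        # No incremental conversions means no finite cost per real conversion.
        "adjusted_cpa": spend / incremental if incremental > 0 else float("inf"),
    }


# The worked example above: spend is implied by 95 conversions at a $21.08 CPA.
result = lift_adjusted_cpa(95, 49, spend=95 * 21.08)
print(round(result["incremental_share"], 4))  # 0.4842
print(round(result["adjusted_cpa"], 2))       # 43.53
```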

Further Diligence

Here are some additional thoughts on improving all things attribution.

Work With a Conversion Attribution Vendor. If you’re working with multiple partners all running similar tactics, employing a conversion attribution company such as PlaceIQ, Convertro or Adometry is a must for properly evaluating who gets credit for driving those conversions.

Attribution companies such as these work by capturing data (generally through a pixel-based process) on every impression that’s part of an overarching digital ad campaign. Then they determine which ads, or how many ads, caused a particular user to convert. While methodologies vary, credit is algorithmically assigned depending on the number of ads shown, whether or not the ads were in view, the response each ad got from the viewer, and whether or not the viewer had shown prior conversion intent, among a host of other things.
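To make “algorithmically assigned” a bit more concrete, here’s a toy fractional-credit model in Python. Every weight in it is hypothetical and chosen only for illustration; real vendors’ models are proprietary and far more involved. It splits one conversion across partners by touch weight, discounts non-viewable impressions, boosts clicked ones, and holds back part of the credit when the user had already shown intent:

```python
from dataclasses import dataclass


@dataclass
class Touch:
    partner: str
    viewable: bool
    clicked: bool


# Hypothetical weights for illustration only; not any vendor's model.
NON_VIEWABLE_MULTIPLIER = 0.1  # impressions never in view count for little
CLICK_MULTIPLIER = 3.0         # impressions that drew a click count for more


def touch_weight(t: Touch) -> float:
    w = 1.0
    if not t.viewable:
        w *= NON_VIEWABLE_MULTIPLIER
    if t.clicked:
        w *= CLICK_MULTIPLIER
    return w


def assign_credit(path: list[Touch], prior_intent: bool = False,
                  intent_holdback: float = 0.5) -> dict[str, float]:
    """Split one conversion's credit across partners in proportion to weight.

    If the user had already shown conversion intent, `intent_holdback` of the
    credit is left unassigned, on the theory that some of that conversion
    would have happened anyway.
    """
    total = sum(touch_weight(t) for t in path)
    if total == 0:
        return {}
    pool = 1.0 - intent_holdback if prior_intent else 1.0
    credit: dict[str, float] = {}
    for t in path:
        credit[t.partner] = credit.get(t.partner, 0.0) + pool * touch_weight(t) / total
    return credit


# Partner A shows one user 50 non-viewable ads; partner B shows one viewable ad that gets clicked.
path = [Touch("A", viewable=False, clicked=False)] * 50 + [Touch("B", viewable=True, clicked=True)]
print(assign_credit(path))
```

With these weights, partner A still collects 62.5% of the credit (50 × 0.1 = 5 versus B’s 3), which shows how sheer volume can win credit even after discounting.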

Like most algorithms in digital advertising, attribution credit can be gamed, which leads to less-than-ideal practices such as cookie bombing, where vendors hammer certain audiences with low-cost ads as often as possible. “Hey,” the thinking goes, “we showed this one user something like 50 ads, so we deserve most of the credit.”

Some ways to combat this are to be strict with partners and demand that ads be capped at a certain frequency, to limit the number of partners that can do retargeting, and to bring retargeting spend in-house.
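If you’ve agreed on a frequency cap with partners, you can also audit it from impression logs. A minimal Python sketch, assuming you can export (partner, user ID) pairs for a fixed reporting window such as a day or a week; the function names and log format are hypothetical:

```python
from collections import Counter
from typing import Iterable, Tuple

Impression = Tuple[str, str]  # (partner, user_id), a hypothetical log format


def frequency_violations(impressions: Iterable[Impression], cap: int) -> dict:
    """Return {(partner, user_id): count} for every pair served more than `cap` ads."""
    counts = Counter(impressions)
    return {pair: n for pair, n in counts.items() if n > cap}


def violation_rate_by_partner(impressions: Iterable[Impression], cap: int) -> dict:
    """Share of each partner's reached users who were served more than `cap` ads."""
    counts = Counter(impressions)
    reached: Counter = Counter()
    over_cap: Counter = Counter()
    for (partner, _user_id), n in counts.items():
        reached[partner] += 1
        if n > cap:
            over_cap[partner] += 1
    return {partner: over_cap[partner] / reached[partner] for partner in reached}


log = [("A", "u1")] * 60 + [("A", "u2")] * 3 + [("B", "u1")] * 2
print(frequency_violations(log, cap=10))       # {('A', 'u1'): 60}
print(violation_rate_by_partner(log, cap=10))  # {'A': 0.5, 'B': 0.0}
```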

Weighting Viewability. Multiple sources confirmed that weighting viewable impressions was important, but there was no consensus on how it should work. Viewability is often included in the algorithms of verification companies, so if you’re using one of those, you’re likely using viewability as some sort of factor.
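One common starting point is the MRC viewability guideline: at least 50% of an ad’s pixels in view for at least one continuous second for display, or two continuous seconds for video. The Python sketch below applies that simplified rule (it ignores the MRC’s special cases) and lets you pick a hypothetical weight for non-viewable impressions, where 0.0 drops them entirely. The input format is an assumption about what a verification vendor might report:

```python
from typing import Iterable, Tuple


def is_viewable(pct_pixels_in_view: float, continuous_seconds: float,
                is_video: bool = False) -> bool:
    """Simplified MRC rule: 50%+ of pixels in view for 1+ continuous second
    (display) or 2+ continuous seconds (video). Special cases are ignored."""
    min_seconds = 2.0 if is_video else 1.0
    return pct_pixels_in_view >= 50.0 and continuous_seconds >= min_seconds


def weighted_impressions(impressions: Iterable[Tuple[float, float, bool]],
                         non_viewable_weight: float = 0.0) -> float:
    """Count impressions, giving non-viewable ones a (hypothetical) reduced weight.

    Each impression is (pct_pixels_in_view, continuous_seconds, is_video).
    """
    return sum(1.0 if is_viewable(p, s, v) else non_viewable_weight
               for p, s, v in impressions)


imps = [(80, 3.0, False), (20, 10.0, False), (55, 2.5, True)]
print(weighted_impressions(imps))                            # 2.0
print(weighted_impressions(imps, non_viewable_weight=0.25))  # 2.25
```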

Compare Converted Users With Existing CRM Data. This is where things get nitpicky. If you’re driving sales and each sale has a specific order ID that ties back to a CRM system, you can tell which other marketing messages (if any) helped drive that sale.

Let’s say that one of the view-through converters is on your e-mail list, is a frequent shopper and also used a coupon that you sent specifically to them. That conversion can be attributed to other media.
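Here’s a minimal Python sketch of that check, assuming you can look up CRM rows by order ID. The field names (`on_email_list`, `frequent_shopper`, `personal_coupon_used`) are hypothetical stand-ins for whatever your CRM actually records, and the sketch flags orders for review rather than reassigning credit automatically:

```python
def other_media_signals(crm_row: dict) -> list:
    """List signs in a CRM row that other marketing touched this customer.

    The field names are hypothetical placeholders for your own CRM schema.
    """
    signals = []
    if crm_row.get("on_email_list"):
        signals.append("email list")
    if crm_row.get("frequent_shopper"):
        signals.append("frequent shopper")
    if crm_row.get("personal_coupon_used"):
        signals.append("personal coupon")
    return signals


def review_view_throughs(order_ids, crm_by_order_id):
    """Split view-through order IDs into (clean, flagged-for-review)."""
    clean, flagged = [], {}
    for order_id in order_ids:
        # Orders missing from the CRM get an empty row, i.e. no signals.
        signals = other_media_signals(crm_by_order_id.get(order_id, {}))
        if signals:
            flagged[order_id] = signals
        else:
            clean.append(order_id)
    return clean, flagged


crm = {
    "o1": {"on_email_list": True, "frequent_shopper": True, "personal_coupon_used": True},
    "o2": {},
    "o3": {"frequent_shopper": True},
}
clean, flagged = review_view_throughs(["o1", "o2", "o3", "o4"], crm)
print(clean)    # ['o2', 'o4']
print(flagged)  # {'o1': ['email list', 'frequent shopper', 'personal coupon'], 'o3': ['frequent shopper']}
```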

Or say you see duplicate conversions for the same order, one from search and one from display. Based on user IDs and how they tie back to the conversion’s order ID, you can likely find out when that person first visited the page and when they first searched. Use this to further attribute media.
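The same idea as a minimal Python sketch, assuming you have conversion claims as (order ID, channel) pairs, an order-to-user lookup, and a touch log of (user ID, channel, timestamp); all names and sample data are hypothetical. It finds orders claimed by more than one channel and reports which of the claiming channels reached that user first:

```python
from collections import defaultdict
from datetime import datetime


def find_duplicate_claims(claims):
    """claims: iterable of (order_id, channel). Return {order_id: channels} claimed by 2+ channels."""
    channels = defaultdict(set)
    for order_id, channel in claims:
        channels[order_id].add(channel)
    return {order_id: chans for order_id, chans in channels.items() if len(chans) > 1}


def first_touch_by_channel(touches, user_id):
    """touches: iterable of (user_id, channel, timestamp). Earliest touch per channel for one user."""
    first = {}
    for uid, channel, ts in touches:
        if uid == user_id and (channel not in first or ts < first[channel]):
            first[channel] = ts
    return first


def earliest_claiming_channel(claims, order_to_user, touches):
    """For each double-claimed order, name the claiming channel that reached the user first."""
    touches = list(touches)  # allow several passes over the log
    result = {}
    for order_id, chans in find_duplicate_claims(claims).items():
        firsts = first_touch_by_channel(touches, order_to_user[order_id])
        seen = {c: ts for c, ts in firsts.items() if c in chans}
        if seen:
            result[order_id] = min(seen, key=seen.get)
    return result


claims = [("o1", "search"), ("o1", "display"), ("o2", "display")]
order_to_user = {"o1": "u1", "o2": "u2"}
touches = [
    ("u1", "display", datetime(2016, 2, 28, 9, 0)),
    ("u1", "search", datetime(2016, 3, 1, 14, 30)),
    ("u1", "display", datetime(2016, 3, 2, 8, 15)),
    ("u2", "display", datetime(2016, 3, 1, 10, 0)),
]
print(earliest_claiming_channel(claims, order_to_user, touches))  # {'o1': 'display'}
```

Which channel came first is one input to the credit decision, not the whole answer.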

There are signs that the world economy is slowing, and that might mean a renewed focus on return on investment and the efficacy of media. That, in turn, means a spotlight on quality ads that truly drive sales.